For the Digilent Design Contest, one of the teams created an AssistGlove that senses hand movements and enables intuitive sign language. The Instructable by

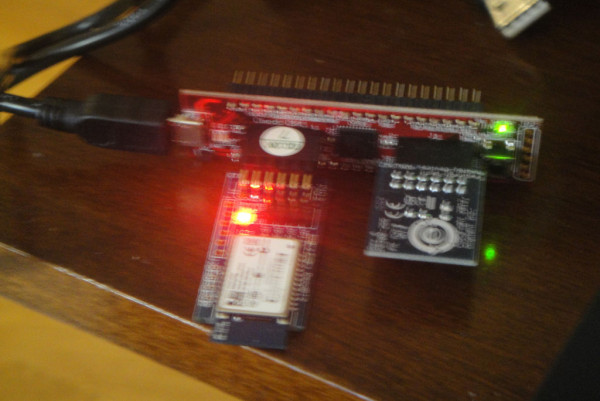

You will need a chipKIT Cmod, a PmodBT2, and a PmodACL2. If you want to really expand the project and allow for further speech, you can use a ZYBO.

The project uses Bluetooth in conjunction with the Cmod and the Pmod accelerometer. Measurements are taken, and the X measurement is sent over Bluetooth and received by the PC being used. The received values are displayed, and the Cmod’s LEDs blink at different frequencies depending on whether the accelerometer was moved up or down (i.e., whether X is positive or negative).
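To make the sign-based behavior concrete, here is a minimal Python sketch of the decision described above. It is not the team's code: the `X=<value>` line format, the function names `parse_x` and `blink_period_ms`, the two blink periods, and the choice to treat zero as positive are all assumptions made for illustration.

```python
# Hypothetical blink periods; the original write-up does not give actual values.
FAST_PERIOD_MS = 200  # assumed: used when X is positive (moved up)
SLOW_PERIOD_MS = 800  # assumed: used when X is negative (moved down)


def parse_x(line: str) -> int:
    """Parse one received line such as 'X=-87' (assumed format) into an integer."""
    _, _, value = line.strip().partition("=")
    return int(value)


def blink_period_ms(x: int) -> int:
    """Pick an LED blink period from the sign of the X reading.

    Zero is treated as positive here, which is an arbitrary choice.
    """
    return FAST_PERIOD_MS if x >= 0 else SLOW_PERIOD_MS


if __name__ == "__main__":
    # Stand-in for lines that would arrive over the Bluetooth serial link.
    for line in ["X=312", "X=-87"]:
        x = parse_x(line)
        print(f"received X={x}, blink every {blink_period_ms(x)} ms")
```

On the real hardware the same comparison would run on the Cmod, with the PC side only displaying the values it receives.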

Some of the other endeavors on this as-yet-unfinished project are more ZYBO-focused. These include a debugging project, connecting the ZYBO to the PmodWiFi, writing a Linux application for the ZYBO, and compiling a speech synthesizer library.

Let us know if you’ve worked on this project!