Comparing digital storage oscilloscopes (DSOs), like the Analog Discovery 2, can be a bit (pun intended) dry and can seem like a battle of “Who’s got the bigger numbers for their specs?” That is, until you realize how these specs depend on each other and on the way the scope is operating. Beyond the number of I/O channels, the two main specs most scope designs push are bandwidth and sampling rate.

Now, there’s also this thing called memory that is needed to store these samples. What I have recently come to learn is that the amount of memory a DSO can allocate to data acquisition is interconnected with the bandwidth and sampling rate, which makes more sense as we start to unfold it all. I have seen this parameter referred to as “memory depth”, “memory”, “record length”, “capture memory”, and just “the buffer.” I have also seen it quoted as a “max” value, which comes back to the interdependencies of memory, sampling rate, and bandwidth. For now, let’s call this value memory depth. For example, the Analog Discovery 2 has a maximum memory depth (or buffer size, as Digilent’s documentation calls it) of 8192 samples.

On my screen, this picture is actually larger than the device itself.

Okay, so quick equation: Time length of captured signal = Memory Depth / Sample Rate. Cool, so samples divided by samples per second leaves us with a quantity of time. Using the Analog Discovery 2’s specs as an example, if we sample at the max rate of 100 MS/s and use the entire memory depth of 8192 samples, we can capture a waveform about 82 µs long. So, if we have more memory depth, we can capture a longer signal, right? Technically, yes. And with a higher sampling rate, we can resolve higher-frequency content more clearly, effectively increasing the scope’s usable bandwidth, right? Also, technically, yes, though only up to the limit set by the scope’s analog front end. However, a scope will not always sample at the max rate, and there are situations where a large memory depth can hinder the performance of a scope.
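To make the arithmetic concrete, here is a minimal Python sketch of the capture-time equation. The function name `capture_time` is a hypothetical helper of my own, not part of any Digilent software; it simply divides samples by samples per second.

```python
def capture_time(memory_depth_samples: int, sample_rate_sps: float) -> float:
    """Length of the captured record in seconds: samples / (samples per second)."""
    if sample_rate_sps <= 0:
        raise ValueError("sample rate must be positive")
    return memory_depth_samples / sample_rate_sps


# Analog Discovery 2 figures quoted above: 8192-sample buffer at 100 MS/s
t = capture_time(8192, 100e6)
print(f"{t * 1e6:.2f} us")
```

Doubling either the memory depth or the time per sample (halving the rate) doubles the record length, which is the trade-off the rest of this post keeps coming back to.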

A scope samples at faster rates as the time base gets shorter (fewer seconds per division). This is handled automatically by the DSO. What this also means is that the scope samples at slower rates for longer time bases, all relative to the available memory depth. Last quick equation: Sample rate = Memory depth / (number of time divisions × time per division). Using the Analog Discovery 2’s specs again, 8192 samples spread across 10 time divisions at 10 ms per division (a 100 ms window) gives a sample rate of about 82 kS/s, far below the 100 MS/s maximum. So, we can see that at longer time bases the sample rate decreases. To keep higher sampling rates at these longer time bases, more acquisition memory is needed. This, of course, isn’t always necessary, but debugging can get hairy and sometimes comes down to short, yet infrequent, events. This is where “deep memory” starts to show up in spec sheets. Deep memory allows the user to add (typically manually) more memory to the acquisition buffer, but at a cost in performance.
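Here is a rough Python sketch of how a scope might pick its sample rate from the time base. The helper `auto_sample_rate` is hypothetical, and it assumes the scope simply spreads the full buffer across all divisions and clamps at the maximum ADC rate; many real instruments choose from a discrete set of rates instead, so actual numbers can differ.

```python
def auto_sample_rate(memory_depth_samples: int,
                     num_divisions: int,
                     time_per_div_s: float,
                     max_rate_sps: float) -> float:
    """Sample rate that fills the buffer across the whole screen,
    clamped to the ADC's maximum rate (simplified model)."""
    window_s = num_divisions * time_per_div_s       # total time shown on screen
    ideal_rate = memory_depth_samples / window_s    # spread the buffer over the window
    return min(ideal_rate, max_rate_sps)            # can't sample faster than the ADC


# Sweep a few time bases with the Analog Discovery 2 numbers from the text
for tdiv in (1e-6, 10e-6, 1e-3, 10e-3):
    rate = auto_sample_rate(8192, 10, tdiv, 100e6)
    print(f"{tdiv * 1e3:g} ms/div -> {rate / 1e3:,.2f} kS/s")
```

At 10 ms/div this model gives about 81.92 kS/s; at 1 µs/div the ideal rate would be 819.2 MS/s, so it gets clamped to 100 MS/s. That clamp is the point where extra memory stops buying you a higher sample rate and only buys a longer record.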

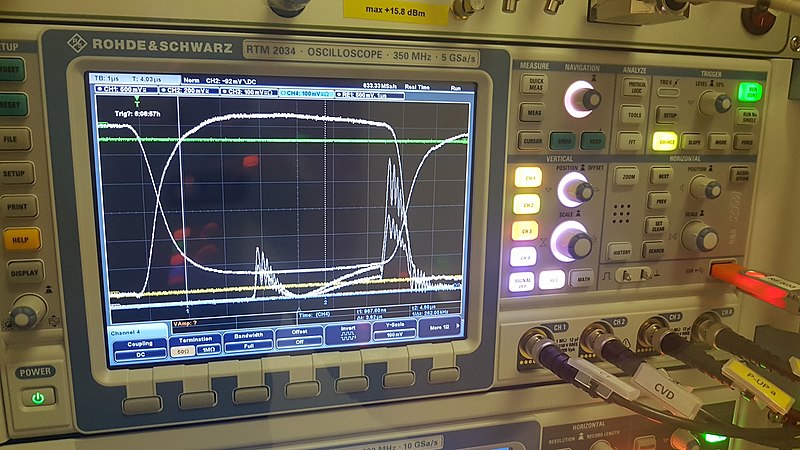

Now, there is still another factor at play: the time it takes the scope to process the acquired data, display the signal, and wait for the next trigger. During this time, the scope is not collecting any new data! I have seen this parameter referred to as the “update rate”, “refresh rate”, or “dead time.” With deeper memory, each acquisition takes longer to process, so the dead time grows and the update rate drops. This can cause issues when looking for infrequent anomalies or quick glitches in the signal, like missing the event altogether if it occurs during this dead time, which becomes increasingly likely once the dead time is longer than the acquisition time.
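To put a rough number on dead time, here is a minimal Python sketch of a simple live-time model: each trigger cycle is one acquisition window followed by dead time, and the fraction of time spent acquiring approximates the chance that a single, randomly timed event lands in a captured record. The function `live_time_fraction` and the dead-time values below are hypothetical, chosen only for illustration; they are not measured Analog Discovery 2 figures, and the model assumes the scope re-triggers back to back.

```python
def live_time_fraction(acq_time_s: float, dead_time_s: float) -> float:
    """Fraction of wall-clock time spent acquiring, assuming each cycle is
    one acquisition window followed immediately by dead time."""
    cycle_s = acq_time_s + dead_time_s
    if cycle_s <= 0:
        raise ValueError("cycle time must be positive")
    return acq_time_s / cycle_s


# Acquisition window: 8192 samples at 100 MS/s (about 82 us)
acq = 8192 / 100e6
# HYPOTHETICAL dead times for illustration only, not Analog Discovery 2 specs
for dead in (1e-3, 10e-3, 100e-3):
    print(f"dead time {dead * 1e3:g} ms -> live {live_time_fraction(acq, dead):.3%}")
```

Once the dead time dwarfs the acquisition window, the scope is blind most of the time, which is why a rare glitch can go unseen even though the trigger keeps firing.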

Bottom line: understand when to push certain parameters of your scope and what may be compromised when you do. Is a higher sampling rate ideal? Is deep memory optimal? Is an infrequent event a concern? Understanding how memory depth, sampling rate, bandwidth, and refresh rate all relate to your acquisition, and where your analysis needs to be strong, will give you a plan for the best results. So far, the Analog Discovery 2 has been a portable workhorse for me.