Compiler design is a complex topic and is considered specialized knowledge, even among professional software engineers. NI LabVIEW software is a multiparadigmatic graphical programming environment that incorporates a wide variety of concepts, including data flow, object orientation, and event-driven programming. LabVIEW is also highly cross-platform, targeting multiple OSs, chipsets, embedded devices, and field-programmable gate arrays (FPGAs).

The National Instruments website has a wealth of information about the LabVIEW compiler. In this blog post, I have done my best to provide a simplified explanation.

1. LabVIEW Compile Process

The first step in compiling a VI is type propagation, which resolves the implied types of terminals that can adapt to type and detects syntax errors. After type propagation, the VI is converted from the editor model into the data flow intermediate representation (DFIR) graph used by the compiler.
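Type propagation can be pictured as a single pass over the graph in dataflow order, where each adaptive node takes its type from whatever is wired into it. The sketch below is a minimal illustration, not LabVIEW's implementation: the graph encoding, the type names `I32`, `DBL`, and `String`, and the rule that mixed numeric inputs widen to the larger type are all assumptions made for the example. An adaptive node that receives incompatible inputs raises an error, standing in for the errors the compiler reports at this stage.

```python
from typing import Dict, List, Optional, Set, Tuple

# Assumption for this sketch: I32 widens to DBL when the two are mixed.
NUMERIC_RANK = {"I32": 0, "DBL": 1}

# Hypothetical encoding: node name -> (fixed output type, or None if the node
# adapts to its inputs, and the names of the nodes wired into it).
Graph = Dict[str, Tuple[Optional[str], List[str]]]


def join_types(node: str, types: Set[str]) -> str:
    """Pick one output type for an adaptive node, or report a type error."""
    if len(types) == 1:
        return next(iter(types))
    if types and all(t in NUMERIC_RANK for t in types):
        return max(types, key=lambda t: NUMERIC_RANK[t])  # widen mixed numerics
    raise TypeError(f"{node}: incompatible input types {sorted(types)}")


def propagate_types(graph: Graph) -> Dict[str, str]:
    """Resolve every node's output type; nodes must be listed in dataflow order."""
    resolved: Dict[str, str] = {}
    for name, (fixed, inputs) in graph.items():
        if fixed is not None:
            resolved[name] = fixed
        else:
            resolved[name] = join_types(name, {resolved[src] for src in inputs})
    return resolved


vi = {
    "a": ("I32", []),
    "b": ("DBL", []),
    "add": (None, ["a", "b"]),  # adaptive: takes its type from its inputs
}
print(propagate_types(vi))  # {'a': 'I32', 'b': 'DBL', 'add': 'DBL'}
```

Wiring a `String` and an `I32` into the same adaptive node would raise `TypeError` instead of producing a type.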

The compiler executes several transforms, such as dead code elimination, on the DFIR graph to decompose it, optimize it, and prepare it for code generation. The DFIR graph is then translated into Low-Level Virtual Machine (LLVM) intermediate representation (IR), and a series of passes runs over the IR to optimize it further and eventually lower it to machine code.
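On a dataflow graph, dead code elimination amounts to a reachability question: a node is needed only if its result can reach something with an observable effect. The following is a minimal sketch under a hypothetical encoding in which each node lists the nodes wired into it and indicators are the only sinks. A real compiler would also have to keep nodes with side effects such as file I/O.

```python
from typing import Dict, List, Set

# Hypothetical encoding: node name -> names of the nodes wired into its inputs.
Graph = Dict[str, List[str]]


def eliminate_dead_code(graph: Graph, sinks: Set[str]) -> Graph:
    """Keep only nodes whose results can flow into a sink (e.g. an indicator)."""
    live: Set[str] = set()
    worklist = list(sinks)
    while worklist:
        node = worklist.pop()
        if node in live:
            continue
        live.add(node)
        worklist.extend(graph[node])  # anything feeding a live node is also live
    return {name: inputs for name, inputs in graph.items() if name in live}


diagram = {
    "x": [],
    "y": [],
    "sum": ["x", "y"],
    "unused_product": ["x", "y"],  # result is never wired to anything
    "indicator": ["sum"],
}
print(eliminate_dead_code(diagram, {"indicator"}))
# {'x': [], 'y': [], 'sum': ['x', 'y'], 'indicator': ['sum']}
```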

2. DFIR Provides a High-Level Intermediate Representation

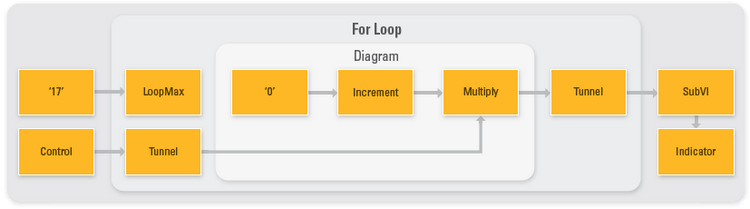

DFIR is a hierarchical, graph-based IR of block diagram code. Similar to G code, DFIR is composed of nodes with terminals that can be connected to other terminals. Some nodes, such as loops, contain diagrams, which may in turn contain other nodes.

The picture above shows the initial DFIR of a simple VI. As compilation progresses, DFIR nodes may be moved, split apart, or injected, but the compiler still preserves characteristics inherent in the developer’s G code, such as parallelism.
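The hierarchy of nodes with terminals, wires between terminals, and structure nodes that own diagrams maps naturally onto a recursive data structure. The classes below are a hypothetical sketch for illustration only; `Node`, `Diagram`, and `count_nodes` are invented names and not part of any LabVIEW API. The example builds a top-level diagram containing a While Loop whose body holds two nodes.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Node:
    name: str
    terminals: List[str] = field(default_factory=list)
    # Structure nodes such as loops own one or more nested diagrams.
    diagrams: List["Diagram"] = field(default_factory=list)


@dataclass
class Diagram:
    nodes: List[Node] = field(default_factory=list)
    # Each wire joins two terminals, written as "node.terminal".
    wires: List[Tuple[str, str]] = field(default_factory=list)


def count_nodes(diagram: Diagram) -> int:
    """Count the nodes on a diagram and on every diagram nested inside it."""
    return sum(1 + sum(count_nodes(d) for d in node.diagrams) for node in diagram.nodes)


loop_body = Diagram(
    nodes=[Node("add", ["x", "y", "sum"]), Node("one", ["value"])],
    wires=[("one.value", "add.y")],
)
top_level = Diagram(
    nodes=[Node("input", ["value"]), Node("while_loop", ["tunnel", "stop"], [loop_body])],
    wires=[("input.value", "while_loop.tunnel")],
)
print(count_nodes(top_level))  # 4
```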

3. DFIR Decompositions and Optimizations

Once in DFIR, the VI runs through a series of decomposition transforms that reduce or normalize the DFIR graph. After the DFIR graph is thoroughly decomposed, the DFIR optimization passes begin. There are more than 30 decompositions and optimizations that can improve the performance of LabVIEW code.
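Constant folding is one familiar example of this kind of graph optimization, chosen here purely for illustration. In this minimal sketch, which assumes a hypothetical graph encoding and only two operators, any `add` or `mul` node whose inputs are all constants is replaced by its computed value. A later dead code pass could then remove the constant inputs that are no longer used.

```python
import operator
from typing import Any, Dict, Tuple

OPS = {"add": operator.add, "mul": operator.mul}

# Hypothetical encoding: node name -> ("const", value), ("control", []) or
# (op name, [input node names]), listed in dataflow order so that inputs are
# always seen before the nodes that consume them.
Graph = Dict[str, Tuple[str, Any]]


def fold_constants(graph: Graph) -> Graph:
    """Replace arithmetic nodes whose inputs are all constants with a constant."""
    folded: Graph = {}
    for name, (op, arg) in graph.items():
        if op in OPS and all(folded[src][0] == "const" for src in arg):
            folded[name] = ("const", OPS[op](*(folded[src][1] for src in arg)))
        else:
            folded[name] = (op, arg)
    return folded


diagram = {
    "two": ("const", 2),
    "three": ("const", 3),
    "x": ("control", []),  # value only known at run time
    "product": ("mul", ["two", "three"]),
    "result": ("add", ["product", "x"]),
}
print(fold_constants(diagram)["product"])  # ('const', 6)
```

The `result` node is left alone because one of its inputs is a run-time control.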

4. DFIR Back-End Transforms

After the DFIR graph is decomposed and optimized, the back-end transforms execute. These transforms evaluate and annotate the DFIR graph in preparation for ultimately lowering it to LLVM IR.

The clumper is responsible for grouping nodes into clumps, which can run in parallel. The inplacer identifies when allocations can be reused and when a copy must be made. After the inplacer runs, the allocator reserves the memory that the VI needs to execute.
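Both ideas can be illustrated on a plain dependency graph. The sketch below rests on two simplifying assumptions, and its function names are hypothetical. `find_clumps` treats each group of nodes connected by wires as one clump, which is far coarser than a real clumper. `plan_buffers` lets a node that overwrites its input reuse that buffer only when it is the input's sole consumer.

```python
from collections import Counter
from typing import Dict, List, Set

# Hypothetical encoding: node name -> names of the nodes wired into its inputs.
Graph = Dict[str, List[str]]


def find_clumps(graph: Graph) -> List[List[str]]:
    """Group nodes into clumps. Nodes in different clumps share no wires,
    even indirectly, so the clumps could run in parallel."""
    neighbors: Dict[str, Set[str]] = {node: set() for node in graph}
    for node, inputs in graph.items():
        for src in inputs:
            neighbors[node].add(src)
            neighbors[src].add(node)
    seen: Set[str] = set()
    clumps: List[List[str]] = []
    for start in graph:
        if start in seen:
            continue
        seen.add(start)
        stack, clump = [start], []
        while stack:
            node = stack.pop()
            clump.append(node)
            for other in neighbors[node] - seen:
                seen.add(other)
                stack.append(other)
        clumps.append(sorted(clump))
    return clumps


def plan_buffers(graph: Graph, modifies_input: Set[str]) -> Dict[str, str]:
    """For each node that overwrites its first input, decide whether it can
    reuse that input's buffer ('in place') or needs its own copy ('copy')."""
    consumers = Counter(src for inputs in graph.values() for src in inputs)
    return {
        node: "in place" if consumers[graph[node][0]] == 1 else "copy"
        for node in graph
        if node in modifies_input
    }


diagram = {
    "array": [],
    "replace_element": ["array"],  # overwrites the array it receives
    "array_max": ["array"],        # also reads the original array
    "counter": [],
    "increment": ["counter"],
}
print(find_clumps(diagram))
# [['array', 'array_max', 'replace_element'], ['counter', 'increment']]
print(plan_buffers(diagram, {"replace_element"}))
# {'replace_element': 'copy'}
```

The buffer rule is deliberately conservative: it ignores execution order, so it asks for a copy whenever a value has more than one reader, whereas a real inplacer can often avoid the copy by scheduling the read-only consumer first.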

Finally, the code generator is responsible for converting the DFIR graph into executable machine instructions for the target processor.
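One part of lowering a dataflow graph to sequential code is choosing an order for the nodes that respects every wire. The sketch below shows only that step, using the standard library's `graphlib.TopologicalSorter` (Python 3.9+). The graph encoding and the textual pseudo-instructions are hypothetical; they are neither LLVM IR nor LabVIEW's actual output.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Tuple

# Hypothetical encoding: node name -> (operation, [input node names]).
Graph = Dict[str, Tuple[str, List[str]]]


def linearize(graph: Graph) -> List[str]:
    """Emit one pseudo-instruction per node, each after all of its inputs."""
    dependencies = {name: inputs for name, (_, inputs) in graph.items()}
    instructions = []
    for name in TopologicalSorter(dependencies).static_order():
        op, inputs = graph[name]
        operands = ", ".join(inputs)
        instructions.append(f"{name} = {op} {operands}".rstrip())
    return instructions


diagram = {
    "total": ("add", ["x", "y"]),
    "x": ("load", []),
    "y": ("load", []),
    "display": ("store", ["total"]),
}
for line in linearize(diagram):
    print(line)
```

The independent nodes `x` and `y` may be emitted in either order; only `total` and `display` are forced to come after them.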

5. LLVM Provides a Low-Level Intermediate Representation

LLVM is a versatile, high-performance open source compiler framework. It is now widely used in both academia and industry due to its flexible, clean API and nonrestrictive licensing.

LabVIEW uses LLVM to perform instruction combining, jump threading, scalar replacement of aggregates, conditional propagation, tail call elimination, loop invariant code motion, dead code elimination, and loop unrolling.
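These passes are easiest to understand by their effect on code. Loop invariant code motion, for example, moves a computation whose result cannot change between iterations out of the loop. LLVM performs this transformation on IR rather than on source code, so the two Python functions below only illustrate the before-and-after shapes; their names are hypothetical.

```python
from typing import List


def apply_gain_naive(samples: List[float], gain_db: float) -> List[float]:
    out = []
    for s in samples:
        factor = 10 ** (gain_db / 20)  # recomputed on every iteration
        out.append(s * factor)
    return out


def apply_gain_hoisted(samples: List[float], gain_db: float) -> List[float]:
    factor = 10 ** (gain_db / 20)  # loop invariant, computed once before the loop
    return [s * factor for s in samples]


print(apply_gain_hoisted([1.0, 0.5], 20.0))  # [10.0, 5.0]
```

Both versions return identical results; the hoisted one simply evaluates the exponent once instead of once per sample.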

6. DFIR and LLVM Work in Tandem

DFIR is a high-level IR that preserves parallelism, while LLVM is a low-level IR with knowledge of target machine characteristics. Working in tandem, the pair optimizes the LabVIEW code developers write for the processor architecture on which it executes.

Thank you for reading my blog post. For a more technical explanation of how LabVIEW works, follow this link. If you are interested in trying out LabVIEW for yourself, you can purchase a copy of LabVIEW 2014 Home Edition, which includes everything you need to run LINX 3.0. Please comment below with any questions or comments you may have.